Back in the late '90s, when internet data was trickling into your system at around 56 Kbps, it was relatively easy for a computer to process the network packets it received. But like oversized football shoulder pads, new episodes of Friends and, unfortunately, Stargate SG-1, the days of 56 Kbps internet speeds are long gone. Most of the world now enjoys connection speeds of a few hundred Mbps to 1 Gbps, if not more. In fact, 10 Gbps connections are becoming more prevalent and affordable by the day.

So how can edge routers process data packets arriving at 10 Gbps or faster? (Note that filling a connection of any speed with small packets, such as VoIP packets, is much more demanding than filling it with large packets, such as file-transfer packets, because the router has far more packets to handle each second. We'll talk more about this in a future blog post.) One approach is to move packet processing out of the core of your operating system (what we’ll refer to as 'kernel space') and into 'user space'. So what does this 'geek speak' really mean, and why should you care?

What Is Kernel Processing?

Let’s start with what a kernel is and what it does. To keep it simple, we’ll describe it from a ‘generic computer’ point of view, since that’s a product most of us know well. We’ll tie it back to routers a little further down.

The kernel is the ‘boss’ of the operating system, and, by extension, everything a computer does. It contains all the drivers that devices like printers, touchpads, and anything that plugs into your USB port need to run. The kernel also manages memory, telling different apps and processes how much memory they can use, as well as how they can interface with different elements of your hardware.

In many ways, the kernel is like a traffic cop at a busy intersection. Think of applications as vehicles, each needing its share of CPU, memory, storage, and network interfaces. The traffic cop decides which lane of traffic may proceed, and for how long. Not all vehicles are the same (motorcycles, cars, service trucks, 18-wheelers), so the traffic cop takes that into account when deciding who gets to use the intersection.

Your computer’s kernel does the same thing. If a given application — say Microsoft Word — is running, the kernel decides how much memory it can use, and how it interfaces with your hard drive, screen, keyboard, and trackpad or mouse. Microsoft Word, in most cases, doesn’t need much of a traffic lane or timespan to get its job done. The kernel gives it brief access to a small portion of resources, and that’s sufficient.

On the other hand, a memory-intensive program like Pro Tools, which needs to process audio and video, as well as audio effects, in real time, may need a traffic lane or two, and for a longer period of time. Pro Tools requires a lot of memory, and the kernel ensures it gets it. Pro Tools may also need a USB-connected audio interface, a keyboard, and other MIDI controllers. The kernel ensures it gets all of those resources as well, and that everything functions as it should.

Kernel Processing and Network Functions

Now, the kernel also manages networking functions, which include all of the router-related work of receiving packets, processing them (according to some set of protocols and policies), and sending them across one or more network interfaces.

It ought to be clear that the kernel can often find itself quite busy. So to parcel out its precious attention fairly, it traditionally processes each data packet one at a time, using an approach known as scalar processing. Here’s how that works with network data:

- The kernel receives a data packet

- It fetches an instruction (or instructions) regarding how to process it from an instruction cache

- It executes those instructions on the packet

- It then fetches the next packet

- Rinse and repeat
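
To make the steps above concrete, here is a toy Python sketch of scalar processing. It assumes packets are plain dictionaries and that the "instructions" are two hypothetical functions, decrement_ttl and choose_interface, invented for illustration. Python does not model CPU caches, so this shows only the control flow: one packet is fully processed before the next one is fetched.

```python
from collections import deque
from typing import Callable, Deque, Dict, List

# A packet is modeled as a plain dict; real packets are raw bytes in buffers.
Packet = Dict[str, object]
Instruction = Callable[[Packet], Packet]


def decrement_ttl(pkt: Packet) -> Packet:
    # Hypothetical instruction: a router decrements the IP time-to-live when forwarding.
    pkt["ttl"] = int(pkt["ttl"]) - 1
    return pkt


def choose_interface(pkt: Packet) -> Packet:
    # Hypothetical toy routing rule, for illustration only.
    pkt["out_if"] = "eth1" if str(pkt["dst"]).startswith("10.") else "eth0"
    return pkt


INSTRUCTIONS: List[Instruction] = [decrement_ttl, choose_interface]


def scalar_process(rx_queue: Deque[Packet]) -> List[Packet]:
    """Process packets strictly one at a time: receive, fetch, execute, repeat."""
    tx: List[Packet] = []
    while rx_queue:
        pkt = rx_queue.popleft()        # receive one packet
        for instr in INSTRUCTIONS:      # fetch and execute each instruction on it
            pkt = instr(pkt)
        tx.append(pkt)                  # done; loop back for the next packet
    return tx


queue = deque([{"dst": "10.0.0.5", "ttl": 64}, {"dst": "192.0.2.1", "ttl": 3}])
print([(p["out_if"], p["ttl"]) for p in scalar_process(queue)])
# [('eth1', 63), ('eth0', 2)]
```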

This means that if an interface is bringing in data at 10 Gbps, the kernel must take each packet, one by one, and run the above steps 14.88 million times per second (14,880,952 to be exact) for the smallest packets (64-byte Ethernet frames), and 812,744 times per second for the largest standard packets (1,518-byte frames). Of course, the workload can be shared by spreading it across multiple CPU cores, but even high-performing cores top out at around 2 million packets per second each, so keeping up with small packets at 10 Gbps would take roughly eight cores' worth of processing. In other words, high throughput can quickly become expensive.
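
The packet rates above follow from simple arithmetic. A minimal Python sketch, assuming standard Ethernet framing, where each frame also occupies 20 extra bytes on the wire (an 8-byte preamble and start-of-frame delimiter plus a 12-byte inter-frame gap). The 2 million packets-per-second per-core ceiling is the figure quoted above, not a universal constant; real per-core rates vary with hardware and workload.

```python
import math

# Per-frame overhead on the wire: 7-byte preamble + 1-byte start-of-frame
# delimiter + 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 20
TEN_GBPS = 10_000_000_000


def max_packets_per_second(link_bps: float, frame_bytes: int) -> float:
    """Upper bound on frames per second for back-to-back frames of one size."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_bps / bits_per_frame


small = max_packets_per_second(TEN_GBPS, 64)    # minimum Ethernet frame
large = max_packets_per_second(TEN_GBPS, 1518)  # maximum standard (non-jumbo) frame
print(round(small))  # 14880952
print(round(large))  # 812744

# Cores needed if each core handles about 2 million packets per second (assumed figure).
cores = math.ceil(small / 2_000_000)
print(cores)  # 8
```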

If the kernel gets overwhelmed, the entire system may crash, forcing an unpleasant reboot and a cascade of follow-on problems: a crash brings down everything else with it, including business-critical systems and apps that rely on routing.

Kernel processing presents a clear case of “all your eggs in one basket.” But there is an alternative: user space processing. User space processing moves the heavy work of networking out of the kernel, giving the kernel more elbow room for its core responsibilities and enabling packets to be processed much faster and more efficiently.

What Is User Space Processing?

True to its name, user space is where most application processing takes place. Unlike the kernel, user space gives applications far more room and freedom to execute their instructions unimpeded. Further, if an application hangs or otherwise goes south, it is far less likely to harm the entire system than if it were running in kernel space. That's because processes running in user space don't have unfettered access to kernel space. They can reach only a small part of the kernel, through an interface the kernel exposes known as system calls. When a process makes a system call, control passes to the kernel (traditionally via a software interrupt; modern CPUs also provide dedicated instructions, such as syscall on x86-64), the kernel runs the corresponding handler, and the process resumes once the handler has finished.
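
As an illustration of that boundary, the Python sketch below moves a few bytes through a kernel-managed pipe. Assuming a POSIX system such as Linux, os.pipe, os.write, os.read and os.close are thin wrappers around the pipe(2), write(2), read(2) and close(2) system calls, so every step hands control to the kernel and back. The helper name roundtrip_through_kernel is hypothetical.

```python
import os


def roundtrip_through_kernel(message: bytes) -> bytes:
    """Send bytes into a kernel-owned pipe buffer and read them back out."""
    read_fd, write_fd = os.pipe()               # pipe(2): kernel allocates the buffer
    try:
        os.write(write_fd, message)             # write(2): kernel copies bytes in
        return os.read(read_fd, len(message))   # read(2): kernel copies bytes out
    finally:
        os.close(read_fd)                       # close(2) on both descriptors
        os.close(write_fd)


print(roundtrip_through_kernel(b"hello, kernel"))  # b'hello, kernel'
```

The user-space program never touches the pipe's buffer directly; it can only ask the kernel to act on its behalf, which is exactly what keeps a misbehaving application from corrupting kernel state.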

Why Should You Care?

The short answer is that you shouldn't, unless you are a software engineer or someone looking to understand how a product like TNSR® can achieve astounding router performance on commercial off-the-shelf hardware, freeing you from expensive, proprietary, vendor-locked solutions.

TNSR Leverages VPP, Which Leverages User Space Processing

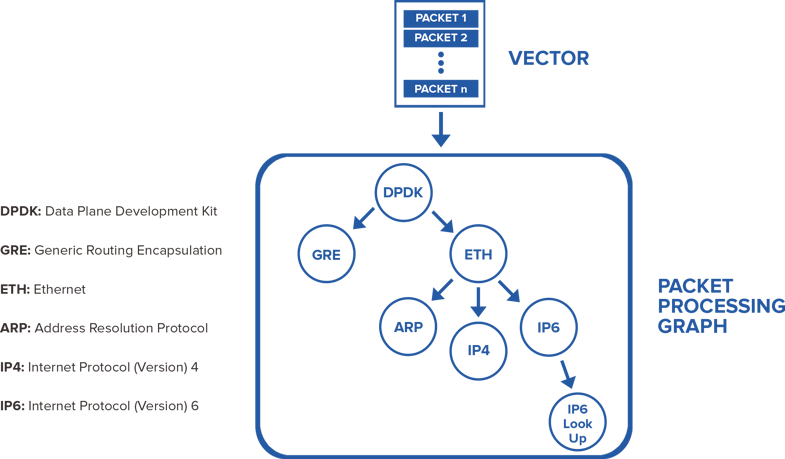

Vector Packet Processing (VPP), an open-source technology from the Linux Foundation's FD.io project, performs packet processing in user space rather than in kernel space. Short and sweet: VPP speeds up packet processing by grabbing many data packets at once and processing them as a group, which is what we call a vector.
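
For contrast with the one-packet-at-a-time loop, here is a toy Python sketch of vector processing under the same assumptions (packets as dicts, two hypothetical processing steps). The loops are inverted: instead of running every step on one packet, it runs one step over a whole vector before moving to the next step. VPP organizes its steps as nodes in a processing graph; in compiled code like VPP's, this ordering is what lets the first packet warm the instruction cache for the rest, an effect plain Python will not show. The vector size of 256 is an assumption chosen for illustration.

```python
from typing import Callable, Dict, List

Packet = Dict[str, object]
Node = Callable[[List[Packet]], List[Packet]]


def decrement_ttl_node(vec: List[Packet]) -> List[Packet]:
    # Hypothetical step, applied to every packet in the vector before moving on.
    for pkt in vec:
        pkt["ttl"] = int(pkt["ttl"]) - 1
    return vec


def choose_interface_node(vec: List[Packet]) -> List[Packet]:
    # Hypothetical toy routing rule, for illustration only.
    for pkt in vec:
        pkt["out_if"] = "eth1" if str(pkt["dst"]).startswith("10.") else "eth0"
    return vec


NODES: List[Node] = [decrement_ttl_node, choose_interface_node]


def vector_process(rx: List[Packet], vector_size: int = 256) -> List[Packet]:
    """Grab up to vector_size packets at once and run each step over the group."""
    tx: List[Packet] = []
    for start in range(0, len(rx), vector_size):
        vec = rx[start:start + vector_size]  # one vector of packets
        for node in NODES:                   # each step's code is reused across the vector
            vec = node(vec)
        tx.extend(vec)
    return tx


packets = [{"dst": "10.0.0.5", "ttl": 64}, {"dst": "192.0.2.1", "ttl": 3}]
print([(p["out_if"], p["ttl"]) for p in vector_process(packets)])
# [('eth1', 63), ('eth0', 2)]
```

The results match scalar processing exactly; only the order of work changes, which is why batching can be adopted without altering forwarding behavior.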

The first packet in a vector serves as a sort of “warm-up” for the instruction cache: once it has pulled the processing instructions into cache, the remaining packets in the vector reuse them without fetching them again. Other mechanisms, such as prefetching, ensure the ‘next packet’ is already pulled into the data cache. The result is an extraordinary boost in throughput, far lower latency, and very reliable results. It’s akin to moving a barrel of marbles one shovelful at a time instead of one spoonful at a time. You can learn more about VPP here.
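
The shovel-versus-spoon idea can be expressed as a toy cost model. In this Python sketch, a fixed cost paid once per batch (standing in for warming the instruction cache) is shared by every packet in the batch, while per-packet work is paid by each packet. The function name and the cost units are hypothetical, chosen only to show the trend; they are not measurements of VPP.

```python
def cost_per_packet(fixed_cost_per_batch: float,
                    per_packet_cost: float,
                    vector_size: int) -> float:
    """Average cost per packet when a one-time batch cost is amortized."""
    if vector_size < 1:
        raise ValueError("vector_size must be at least 1")
    return fixed_cost_per_batch / vector_size + per_packet_cost


# Hypothetical units: 100 per batch, 10 per packet.
for n in (1, 16, 256):
    print(n, cost_per_packet(100.0, 10.0, n))
# 1 110.0
# 16 16.25
# 256 10.390625
```

With one packet per batch (scalar processing), every packet pays the full warm-up cost; with larger vectors, the average cost per packet approaches the per-packet work alone.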

TNSR takes VPP and integrates it with other open-source projects, including the Data Plane Development Kit (DPDK), Free Range Routing (FRR), strongSwan (IPsec/IKE), and more, to create a high-performance software router, also known as a vRouter or virtual router.

Head over to the TNSR software section of our website to learn more about the product, its applications, and how TNSR software can dramatically boost your edge and cloud router performance, as well as stretch your IT budget.
