The $20 billion router market is poised for disruption by lower-cost, more flexible software-based routers. Routing software and x86 hardware have simultaneously matured to the point where many routing applications can be performed in virtual appliances and no longer require specialized ASIC-driven hardware.

The router market has long been dominated by specialized hardware-based platforms designed to maximize WAN performance. The concept of software-based routers has been around for well over a decade. Earlier versions of software-based routing failed due to their lack of maturity and poor performance. The current generation of software router options is poised to benefit from the vastly improved processing and I/O capabilities of x86 servers.

Software-based routers aren’t ready to replace all hardware routers, especially not high-performance core routers. But the edge and other segments of the router market will be affected, and software-based routers are becoming an important consideration for network designers.

In addition, advances in server hardware performance mean that more routing functions are now in scope. For example, with the right software architecture, a single core of an Intel x86 processor can now handle up to 10 Gbps of traffic, and this figure can scale across the 8 to 22 cores available per socket. Additionally, server hardware designers have worked with the networking community to deliver faster I/O and more efficient memory access.

The technology migration towards software-defined networking (SDN) and network function virtualization (NFV) will also accelerate the trend towards software routing. SDN and NFV disaggregate network functions, including routing, and allow them to run as virtual instances wherever the routing function is required. This disaggregation will take place in the data center (virtualized layer 3 functions), in the enterprise WAN, in the service provider network, and at the customer premises.

A full router needs a forwarding plane and a routing stack. The routing stack provides the intelligence: the control plane and the routing protocols that decide how packets are forwarded. The forwarding plane provides the raw muscle to get packets through the system.

I introduced our overall strategy two years ago, in a piece titled “Further”. We’ve been hard at work ever since making that vision a reality.

At a very basic level, a network function is simply another workload. Like any workload, it consumes CPU cycles, memory and I/O. The typical compute workload mostly consumes CPU and memory with relatively little I/O. Network workloads are the inverse. They are by definition reading and writing a lot of network data. These are the packets going in and out of the physical or virtual NICs. Because processing is typically only done on the packet header, rather than the full packet body, the demand on CPU is mostly driven by the number of packets processed, rather than the size of those same packets.

While there are network functions like deep packet inspection (DPI) that are more CPU intensive, for the purposes of this post, we will consider network workloads mostly I/O-centric.

Since 40Gbps IPsec is our goal, let’s start filling in real-world numbers.

We first look at processing a line-rate stream of packets on a 10 Gbps Ethernet interface. If we look at the shortest possible Ethernet packet, we see 46 bytes of payload, another 18 bytes of Ethernet headers and CRC, and 20 bytes of preamble, start-of-frame delimiter (SFD), and inter-frame gap (IFG). This totals 84 bytes, or 672 bits, so to handle true line rate, we must process 10,000,000,000 bits per second, 672 bits at a time, or 14,880,952 packets per second.

Simple math also tells us each packet must be processed within 67.2 nanoseconds (ns), and that a CPU core clocked at 2GHz has a clock cycle of 0.5 ns. This leads to a budget of 134 CPU clock cycles per packet (CPP) on a single 2.0 Gigahertz (GHz) CPU core.

For 40GE interfaces, the per-packet budget is 16.8 ns with 33.6 CPP, and for 100GE interfaces it is 6.7 ns and 13 CPP. Even with the fastest modern CPUs, there is very little time to do any kind of meaningful packet processing. That’s your total budget per packet: to receive the packet on a given interface, process the packet, and transmit the packet out a presumably different interface.
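The arithmetic above is easy to reproduce. Here is a minimal Python sketch, assuming the same 38 bytes of per-frame overhead (18 bytes of headers and CRC plus 20 bytes of preamble, SFD and IFG) and a single 2.0GHz core. The function name `per_packet_budget` and its parameters are our own invention for illustration, and the unrounded results may differ from the rounded figures in the text in the last digit.

```python
# Per-frame overhead on the wire, in bytes:
# 18 (Ethernet headers + CRC) + 20 (preamble + SFD + inter-frame gap).
ETHERNET_OVERHEAD_BYTES = 18 + 20


def per_packet_budget(line_rate_bps, payload_bytes, clock_hz):
    """Return (packets per second, ns per packet, clock cycles per packet)."""
    wire_bits = (payload_bytes + ETHERNET_OVERHEAD_BYTES) * 8
    pps = line_rate_bps / wire_bits       # packets arriving per second at line rate
    ns_per_packet = 1e9 / pps             # time available for each packet
    cycles_per_packet = clock_hz / pps    # clock cycles available for each packet
    return pps, ns_per_packet, cycles_per_packet


if __name__ == "__main__":
    # Minimum-size frames (46-byte payload) on a single 2.0GHz core.
    for gbps in (10, 40, 100):
        pps, ns, cycles = per_packet_budget(gbps * 10**9, 46, 2.0e9)
        print(f"{gbps:>3} Gbps: {pps:,.0f} pps, {ns:.1f} ns, {cycles:.1f} cycles")
```

Passing a different `payload_bytes` or `clock_hz` gives the budget for other frame sizes and core speeds.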

If we only allow large packets, we still end up with a difficult-to-solve problem. An Ethernet packet with a 1,500 byte payload still has the 18 bytes of Ethernet headers and CRC, and 20 bytes of preamble, SFD and IFG. Taken together, this totals 1,538 bytes, or 12,304 bits per packet. Even at large frame sizes, we must still process 10,000,000,000 bits per second, 12,304 bits at a time. This yields a lower, but still crisp 812,743 packets per second at 10Gbps, 3,250,975 PPS at 40Gbps, and 8,127,438 PPS at 100Gbps.

Now our per-packet budget is somewhat larger. At 10Gbps we have 1,230 ns to process each 1,538 byte packet, at 40Gbps we have 307 ns, and at 100Gbps, 123 ns. For a single core clocked at 2GHz, and counting one instruction per clock cycle, this equates to 2,460 instructions at 10Gbps, 614 instructions at 40Gbps and 246 instructions to handle 100Gbps.

If only there were a simple way to gain more instructions per unit of time.

Enter overclocking.

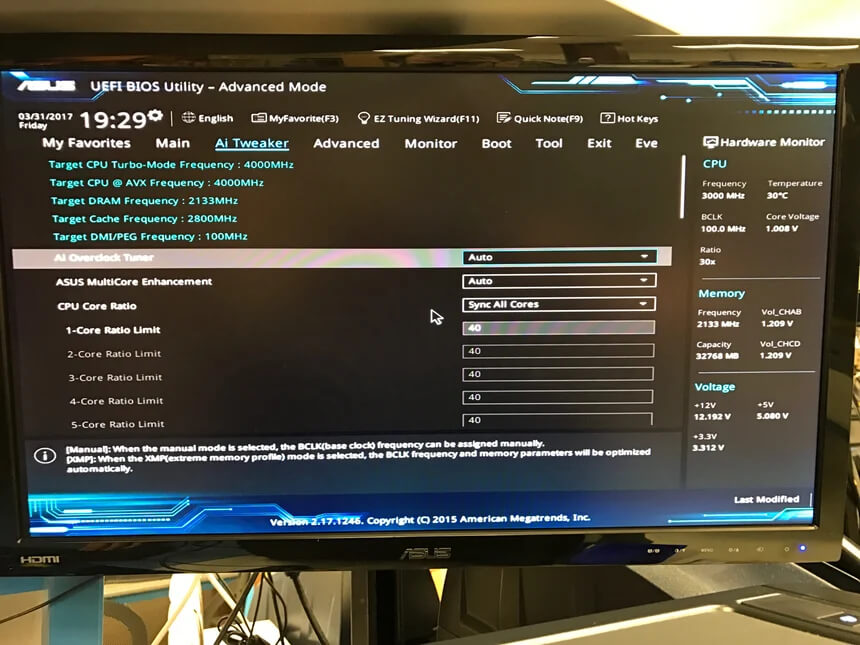

Remember that the title of the OSCON talk to be delivered on 11 May includes the phrase “on commodity hardware”. In order to be true to the premise, we want to be able to stay with hardware you could buy without going to a large server manufacturer. The Intel i7-6950X has an interesting combination of high core count, a high base frequency of 3.0GHz, 40 PCIe 3.0 lanes, and the ability to be overclocked. While this processor is typically marketed at the gaming and enthusiast community, our interests are on a different axis than generating the ultimate frame rate while playing Crysis 3.

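By overclocking an i7-6950X to 4.0GHz, we gain considerable headroom for our packet processing. The time available per packet is set by the line rate, so it does not change, but each nanosecond now holds four clock cycles instead of two. For 1,500 byte payloads we still have 1,230 ns, but now 4,920 instructions, to process each packet at 10Gbps. At 40Gbps we have 307 ns or 1,228 instructions to process each packet.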
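To see what the extra clock speed buys, here is a small Python sketch that holds the 1,538 byte wire size fixed and varies only the core clock. The helper names `ns_per_packet` and `cycles_per_packet` are hypothetical, and counting one instruction per cycle is a simplifying assumption rather than a measurement. The sketch works from unrounded values, so its cycle counts land a cycle or two above the figures quoted in the text, which start from rounded nanoseconds.

```python
# Wire size of a frame with a 1,500-byte payload:
# payload + 18 (headers + CRC) + 20 (preamble + SFD + IFG) = 1,538 bytes.
WIRE_BYTES = 1500 + 18 + 20


def ns_per_packet(line_rate_bps, wire_bytes=WIRE_BYTES):
    """Time on the wire per packet; depends only on the line rate."""
    return wire_bytes * 8 / line_rate_bps * 1e9


def cycles_per_packet(line_rate_bps, clock_hz, wire_bytes=WIRE_BYTES):
    """Clock cycles a single core gets per packet at the given frequency."""
    return wire_bytes * 8 * clock_hz / line_rate_bps


if __name__ == "__main__":
    # 2.0GHz reference core, 3.0GHz base clock, 4.0GHz overclock.
    for clock_ghz in (2.0, 3.0, 4.0):
        for gbps in (10, 40):
            rate = gbps * 10**9
            ns = ns_per_packet(rate)
            cycles = cycles_per_packet(rate, clock_ghz * 1e9)
            print(f"{clock_ghz} GHz @ {gbps} Gbps: {ns:.1f} ns, {cycles:.0f} cycles")
```

The nanosecond column is identical in every clock row; only the cycle count grows with frequency.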

So let’s build a system that can route 40Gbps Ethernet using an i7-6950X at 4.0GHz while being able to buy the components on Amazon or Newegg.

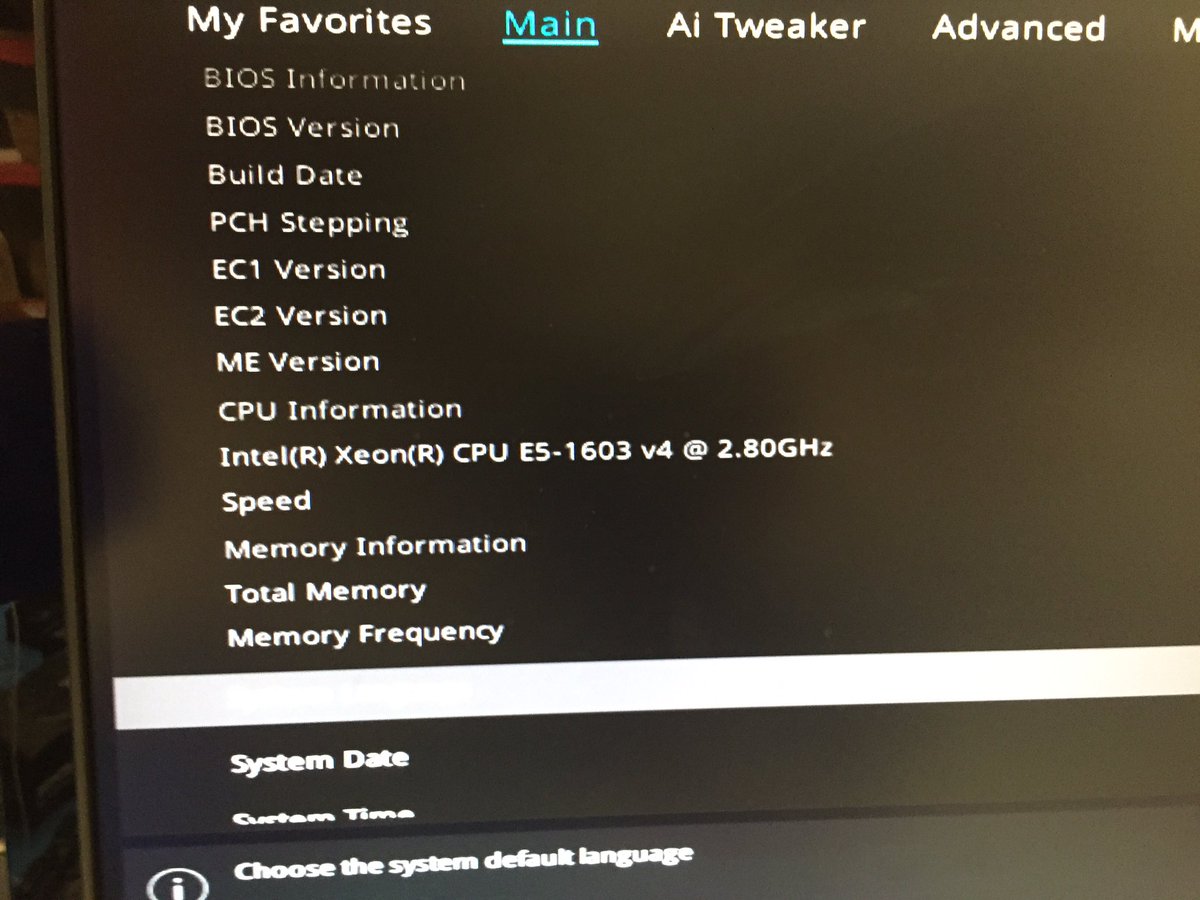

First, we’re going to want a motherboard that is socketed for our chosen CPU. Since we’re building a router, we’re going to need a sufficient number of PCIe 3.0 slots. And, since this is network gear, having out-of-band management is a nice feature. We selected the ASUS X99-WS/IPMI motherboard for our behemoth router. This motherboard also has an M.2 socket that supports NVMe, for a fast boot drive. We used a 256GB ADATA SX8000 for our simple storage needs.

For memory, we selected 32GB of Corsair’s “Vengeance LPX” DDR4 2666MHz RAM.

The i7-6950X has a TDP of 140W, before we attempt to overclock it. At 4.0GHz, we’re going to need to cool nearly 280W. In order to keep the system out of thermal throttling, we’re not going to be able to air-cool the system. We’re going to want water cooling. While more esoteric water cooling systems exist, we decided to go simple, and used a Corsair H110i.

We selected a modular power supply, as they help keep the interior of our build clear of extraneous wires. With overclocking, water cooling and high-power NICs, as James T. Kirk often requested of engineering, “We’re going to need more power.” We selected an EVGA 1000 PS power supply.

And, finally, we wrapped the whole thing up in a Corsair Carbine 400C case.

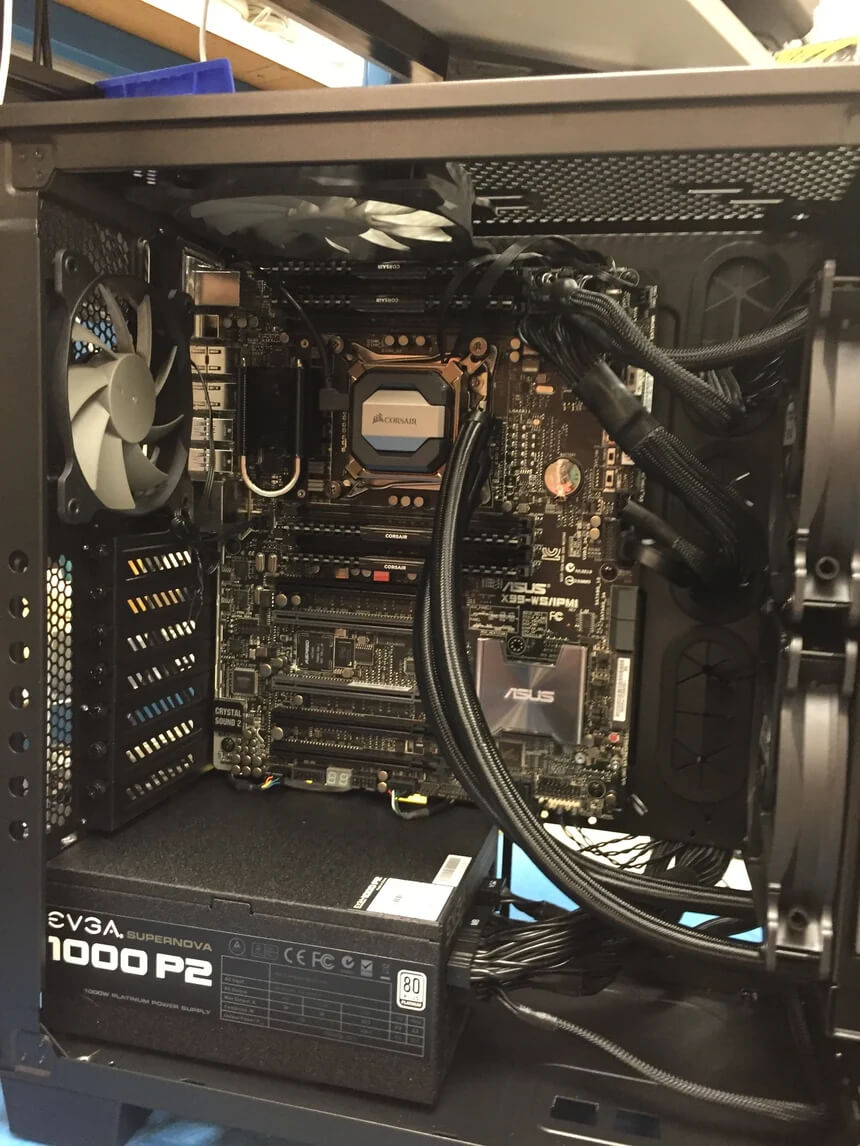

First, the glamour shot, NVMe drives not shown.

You’ll notice we have the components to build two routers. This makes sense when you realize that we’re going to have to test the result, and we’re going to want to run an IPsec tunnel for hosts on a pair of LAN segments.

Next, an interior shot of all of the components assembled.

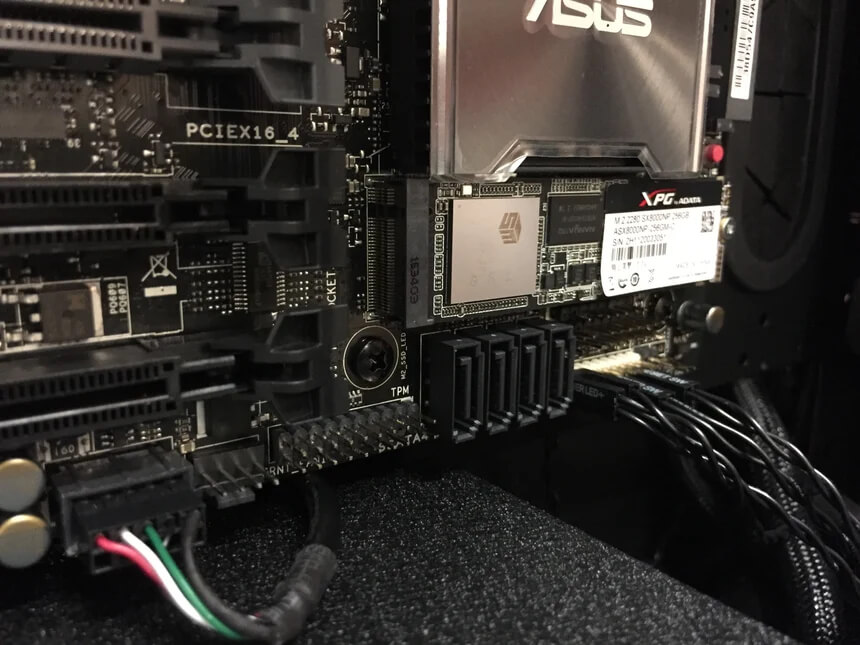

The sharp-eyed reading this post will note that the M.2 drive isn’t installed. In order to assuage your doubts, here is a shot of the M.2.

We did run into a problem with this build. We had sourced the i7s from a seller on Amazon. When we installed the first, it wouldn’t POST. Not knowing if we had a bad processor or a bad motherboard, we installed the second i7 in the second motherboard, and noticed something quite curious.

Crap! Amazon sold us a delidded CPU! We returned both of the i7-6950X CPUs that we purchased on Amazon, and sourced replacements from a more reputable, local supplier. Once these were installed, the situation improved.

We subsequently loaded CentOS 7 on both systems, and placed them in our test rack.

A huge shout-out to Hunter S. Thompson for specifying and sourcing the components for this build, as well as building both routers during his Spring Break from his first year of college in Colorado.

Future blog posts will detail the software architecture of the system, our control plane, and performance benchmarking to see if we can reach the goal.

In closing, Happy April Fool’s Day.
